You’ve built a new feature. Before releasing it to customers, someone needs to verify it actually works the way your business intended. That’s User Acceptance Testing (UAT)—your final safety check before going live. This guide explains UAT in plain language: what it is, when to do it, who should be involved, and the simple tools you need to run it successfully.

TL;DR: UAT at a Glance

If you only have a minute, this section explains what UAT means in simple terms: what it is, when it happens in the release cycle, and what you need in place to keep it on track.

What it is

Business validation of end‑to‑end flows against acceptance criteria in a production‑like environment.

- Confirms “built the right thing”

- Uses realistic data and roles

- Produces a pass/fail sign‑off

When to run it

Near release, after system/integration testing is green, before go‑live.

- Major features or critical flows

- Regulatory or contractual changes

- Release gates and go/no‑go

What you need

Clear acceptance criteria, a UAT plan, stable staging, seeded data, and a triage loop.

- UAT scenarios + test data

- Defect workflow + owners

- Exit criteria + sign‑off

Table of Contents

Use this quick table of contents to jump to what you need.

- What Is UAT?

- When to Use UAT

- Core Components of a Solid UAT

- Case Studies

- Stakeholders and Responsibilities (RACI‑style)

- An End‑to‑End UAT Flow You Can Copy

- Metrics That Keep UAT Honest

- UAT Best Practices: Lessons from the Field

- What the Research Says

- Templates and Spokes (This Hub Links Out)

- Key Terms to Know

- FAQ

What Is UAT?

If you want to know what UAT means, start here. We keep it practical and connect the definition to how teams actually run it day to day.

User Acceptance Testing (UAT) is the final verification step before releasing software to customers. It’s performed by real business users—not just developers or testers—to ensure the software works as intended in real-world scenarios.

In simple terms: UAT answers the question “Did we build the right thing?” (not just “Did we build it correctly?”).

Unlike technical testing that checks if code works, UAT verifies:

- Complete business workflows work from start to finish

- Business rules are followed correctly

- Data stays accurate throughout processes

- The software is actually usable by real people in real situations

UAT vs. QA vs. System Testing: What's the Difference?

| Feature | UAT (User Acceptance Testing) | QA Testing | System Testing |
|---|---|---|---|
| Primary Goal | Validate software meets business needs and is ready for real-world use | Find defects and ensure quality throughout development | Verify technical requirements and integrations work correctly |
| Who Performs It | Business users, stakeholders, and subject-matter experts | QA engineers and testers | QA/Test engineers |
| When It Happens | Near the end, after system testing, before production release | Throughout development lifecycle | After unit and integration testing, before UAT |
| Environment | Production-like or staging environment with realistic, sanitized data | Test environments (can be varied) | Integrated test environment |
| Test Focus | End-to-end business workflows, user scenarios, business rules | Functional and non-functional requirements, defect identification | System behavior, integrations, technical specifications |
| Success Criteria | Business sign-off that software is fit for purpose | All test cases pass, defects are within acceptable limits | System meets technical requirements and specifications |
| Documentation | Business scenarios, acceptance criteria, sign-off forms | Test cases, test plans, defect reports | Technical test plans, system specifications |
| Typical Duration | 2-10 business days for most releases | Ongoing throughout development | 1-4 weeks depending on system complexity |
| Example Questions | Does this work for our business? Can users complete their tasks? | Does this meet the requirements? Are there any bugs? | Do all components work together? Do integrations function? |

If you want a formal definition and a practical view side by side, the ISTQB Glossary explains acceptance testing, and Microsoft Learn shows how teams run UAT in Azure Test Plans:

- ISTQB Glossary: https://glossary.istqb.org/term/acceptance-testing

- Microsoft Learn: https://learn.microsoft.com/en-us/azure/devops/test/user-acceptance-testing?view=azure-devops

If you’re wondering how UAT differs from usability testing (which evaluates ease of use and user behavior), see our deep dive: UAT vs. Usability Testing.

When to Use UAT

Deciding when to run UAT can feel fuzzy. This section shows where UAT fits in the release cycle and how much of it you need as risk and scope change. Run UAT as a release gate when the risk to customers, compliance, or revenue is material. Typical triggers include:

Net-new flows or major refactors

Checkout, onboarding, billing, permissions, migrations—anything users interact with directly.

Regulatory/contractual changes

Tax rules, data retention, consent, audit trails—anything with compliance implications.

Integration changes

Payments, identity, ERP/CRM, data pipelines—systems talking to other systems.

Performance/stability concerns

Spikes in error-budget burn, critical bug hotspots, or areas with a history of issues.

High-stakes releases

Executive commitments, tight launch windows, or features critical to business goals.

Revenue-impacting features

Anything that directly affects how customers pay, subscribe, or transact.

How to decide if you need UAT. Ask yourself these questions:

- Does this change affect critical business workflows?

- Could a defect cause financial loss or compliance issues?

- Are multiple systems or teams involved?

- Is this a new feature that users haven’t seen before?

If you answer “yes” to any of these, UAT is recommended.
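If you want to bake this checklist into a release template, a minimal Python sketch might look like this. `ChangeProfile` and `uat_recommended` are hypothetical names for illustration; the logic is simply the "any yes means UAT" rule stated above.

```python
from dataclasses import dataclass


@dataclass
class ChangeProfile:
    """Answers to the four 'do we need UAT?' questions (hypothetical field names)."""
    affects_critical_workflow: bool
    could_cause_financial_or_compliance_loss: bool
    involves_multiple_systems_or_teams: bool
    new_to_users: bool


def uat_recommended(change: ChangeProfile) -> bool:
    """Recommend UAT if any single answer is 'yes'."""
    return any([
        change.affects_critical_workflow,
        change.could_cause_financial_or_compliance_loss,
        change.involves_multiple_systems_or_teams,
        change.new_to_users,
    ])


copy_tweak = ChangeProfile(False, False, False, False)
billing_change = ChangeProfile(True, True, False, False)
print(uat_recommended(copy_tweak))      # False
print(uat_recommended(billing_change))  # True
```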

Core Components of a Solid UAT

It helps to know what “good” looks like before you start. These building blocks shape the UAT experience for business testers, product, QA, and engineering. Think of them as your minimum viable UAT kit that you can version, review, and reuse.

1) Entry criteria (readiness checklist)

- Integration/system tests are green and known critical defects are triaged.

- Stable staging/pre‑prod that mirrors production (feature flags/config aligned).

- Seeded, realistic data (personas, accounts, SKUs, tax regions, permissions).

- Clear acceptance criteria mapped to scenarios.

- UAT roles confirmed (coordinator, business testers, dev/QA on‑call).
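Because the entry criteria work as a gate, some teams keep them as data rather than a document. Here is a minimal Python sketch under that assumption; the checklist keys and `unmet_entry_criteria` are illustrative names, not fields from any particular test-management tool.

```python
# Keys paraphrase the entry-criteria list above; they are illustrative, not a standard.
ENTRY_CRITERIA = (
    "integration_and_system_tests_green",
    "critical_defects_triaged",
    "staging_mirrors_production",
    "realistic_data_seeded",
    "criteria_mapped_to_scenarios",
    "uat_roles_confirmed",
)


def unmet_entry_criteria(status: dict[str, bool]) -> list[str]:
    """Items not yet satisfied; an item missing from `status` counts as unmet."""
    return [item for item in ENTRY_CRITERIA if not status.get(item, False)]


status = {
    "integration_and_system_tests_green": True,
    "critical_defects_triaged": True,
    "staging_mirrors_production": False,  # e.g. feature flags not yet aligned
    "realistic_data_seeded": True,
    "criteria_mapped_to_scenarios": True,
}
print(unmet_entry_criteria(status))
# ['staging_mirrors_production', 'uat_roles_confirmed']
```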

2) UAT plan (scope, schedule, owners)

- Objectives and in‑scope flows

- Participants, environments, and data strategy

- Schedule, communications, and reporting cadence

- Defect workflow and SLAs (triage → fix → retest)

- Exit criteria and sign‑off rules

3) Scenarios and test data

- Business‑language scenarios (e.g., “Refund a partially fulfilled order”)

- Edge cases and negative paths (permissions, rollbacks, retries)

- Role‑based coverage (admin, manager, agent, customer)

- Reusable test accounts and seeded records with safe, realistic values
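To make scenarios reusable and reviewable, you can store them as structured records. The sketch below assumes a simple in-house format (the `Scenario` fields, order #1001, and the test account are hypothetical) and shows one quick check: which roles have no scenario yet.

```python
from dataclasses import dataclass, field


@dataclass
class Scenario:
    scenario_id: str
    title: str                 # business language, e.g. "Refund a partially fulfilled order"
    role: str                  # who runs it: admin, manager, agent, customer
    steps: list[str]
    expected: str              # the acceptance criterion the tester checks
    negative_path: bool = False
    test_accounts: list[str] = field(default_factory=list)


def uncovered_roles(scenarios: list[Scenario], roles: list[str]) -> list[str]:
    """Roles that no scenario exercises yet, in the order given."""
    covered = {s.role for s in scenarios}
    return [r for r in roles if r not in covered]


scenarios = [
    Scenario("S-01", "Refund a partially fulfilled order", "agent",
             ["Open order #1001", "Refund the shipped item only"],
             "Customer is refunded for the shipped item; the rest of the order stays open",
             test_accounts=["agent.uat@example.com"]),
    Scenario("S-02", "Customer tries to issue a refund themselves", "customer",
             ["Sign in as a customer", "Open order #1001", "Look for a refund action"],
             "No refund action is shown", negative_path=True),
]
print(uncovered_roles(scenarios, ["admin", "manager", "agent", "customer"]))
# ['admin', 'manager']
```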

4) Defect management and triage

- Unified intake (one place to log issues, with screenshots/video + logs)

- Severity/impact rules and owners (business + engineering)

- Daily standup/triage during active UAT windows

- Retest loop with explicit acceptance criteria
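A triage queue mostly comes down to a consistent sort order. This minimal sketch assumes a P0 to P3 severity scale and a simple open → fixed → retest → closed status flow (both hypothetical; map them to your own tracker's fields) and lists the most severe, oldest items first, plus anything without an owner.

```python
from dataclasses import dataclass
from datetime import date
from typing import Optional

# Lower rank = more urgent. The P0-P3 labels are an assumed convention.
SEVERITY_RANK = {"P0": 0, "P1": 1, "P2": 2, "P3": 3}


@dataclass
class Defect:
    key: str
    severity: str              # "P0" .. "P3"
    opened: date
    owner: Optional[str] = None
    status: str = "open"       # open -> fixed -> retest -> closed


def triage_queue(defects: list[Defect]) -> list[Defect]:
    """Everything not yet closed, most severe first, oldest first within a severity."""
    active = [d for d in defects if d.status != "closed"]
    return sorted(active, key=lambda d: (SEVERITY_RANK[d.severity], d.opened))


def needs_owner(defects: list[Defect]) -> list[str]:
    """Keys of active defects nobody has picked up yet."""
    return [d.key for d in defects if d.status != "closed" and d.owner is None]


backlog = [
    Defect("UAT-1", "P2", date(2024, 5, 1), owner="dev-a"),
    Defect("UAT-2", "P0", date(2024, 5, 3), owner="dev-b"),
    Defect("UAT-3", "P0", date(2024, 5, 2)),
    Defect("UAT-4", "P1", date(2024, 5, 1), owner="dev-a", status="closed"),
]
print([d.key for d in triage_queue(backlog)])  # ['UAT-3', 'UAT-2', 'UAT-1']
print(needs_owner(backlog))                    # ['UAT-3']
```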

During UAT, clear defect reports save everyone time. UI Zap collects screenshots, short videos, console logs, and network data in one go. Add the free extension.

5) Exit criteria and sign‑off

- All P0/P1 defects resolved or explicitly deferred with mitigation

- Acceptance rate ≥ threshold (e.g., ≥ 95% of scenarios passed)

- Go/no‑go meeting notes captured and stored with the release

- Formal sign‑off by the accountable business owner
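Exit criteria are easier to defend in a go/no-go meeting when they are evaluated the same way every time. Here is a minimal sketch of the three checks above, assuming a 95% threshold and a simple mapping of deferred defect keys to their mitigations; `exit_gate` and the defect keys are hypothetical.

```python
def exit_gate(passed: int, executed: int, open_blockers: list[str],
              deferred_with_mitigation: dict[str, str], signed_off: bool,
              threshold: float = 0.95) -> tuple[bool, list[str]]:
    """Return (go, reasons). An empty reasons list means every exit criterion is met."""
    reasons = []
    rate = passed / executed if executed else 0.0
    if rate < threshold:
        reasons.append(f"acceptance rate {rate:.0%} is below {threshold:.0%}")
    # An open P0/P1 is acceptable only if it is explicitly deferred with a mitigation.
    unresolved = [k for k in open_blockers if not deferred_with_mitigation.get(k)]
    if unresolved:
        reasons.append("unresolved P0/P1: " + ", ".join(unresolved))
    if not signed_off:
        reasons.append("no sign-off from the accountable business owner")
    return (not reasons, reasons)


go, why = exit_gate(39, 40, ["UAT-7"], {"UAT-7": "hidden behind a feature flag"}, True)
print(go, why)  # True []

go, why = exit_gate(36, 40, ["UAT-9"], {}, False)
print(go)       # False
for reason in why:
    print("-", reason)
```

Returning the reasons, not just a yes/no, gives you ready-made notes for the go/no-go record.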

Case Studies

E-commerce Platform Payment Gateway Update

Mid-size SaaS Company

A growing e-commerce platform needed to integrate a new payment gateway to support international transactions. The feature was technically complex, involving currency conversion, tax calculations, and compliance requirements across multiple countries.

The development team completed the integration and passed all technical tests. However, they skipped formal UAT due to time pressure. The feature was released directly to production after system testing showed green lights.

Within 24 hours of release, customer support received 47 complaints about failed transactions in European markets. The issue? The currency conversion logic didn't account for VAT calculations in certain EU countries. The team had to roll back the feature, implement proper UAT with business users from finance and international sales, and re-release two weeks later.

The rollback cost an estimated $125,000 in lost revenue and damaged customer trust. After implementing proper UAT with international stakeholders, the re-release was successful with zero critical issues. The company now mandates UAT for all payment-related features.

Healthcare Portal Patient Scheduling System

Regional Healthcare Provider

A healthcare provider was modernizing their patient scheduling system. The new system needed to handle complex scheduling rules, insurance verification, and integration with electronic health records (EHR).

The system had to work for multiple user types: patients, front desk staff, nurses, and doctors—each with different workflows and needs. Technical testing couldn't capture the nuances of real-world medical office operations.

The team conducted a 5-day UAT with representatives from each user group. They tested realistic scenarios like 'Schedule a follow-up appointment for a patient with multiple insurance providers' and 'Handle a same-day urgent care request.' UAT uncovered 23 workflow issues that technical testing missed, including a critical bug where double-bookings could occur during shift changes.

All critical issues were fixed before launch. The system went live with 98% user satisfaction. Front desk staff reported the new system actually saved them 15 minutes per patient compared to the old system. Zero critical incidents in the first 30 days post-launch.

Stakeholders and Responsibilities (RACI‑style)

Clear ownership keeps UAT moving. These roles define the UAT experience and make the UAT cycle predictable. People often wear multiple hats, but the responsibilities stay the same so decisions don’t stall.

RACI stands for Responsible, Accountable, Consulted, and Informed. It’s a simple way to clarify who does the work (R), who owns the decision (A), who provides input (C), and who needs updates (I) so your UAT cycle doesn’t slow down.

| Role | RACI | Key responsibilities |
|---|---|---|
| Business Owner | A | Defines acceptance criteria; approves scope; signs off; decides on deferrals. |
| UAT Lead/Coordinator | R | Schedules; readies environments and data; runs comms; drives triage and reporting. |
| Business Testers/SMEs | R | Execute scenarios; report defects with evidence; verify fixes; give go/no‑go input. |
| Product Manager | C | Aligns scope to goals; clarifies intent; manages trade‑offs and rollout plan. |
| QA Lead/Engineer | C | Coaches on test design; ensures traceability; helps triage and verify defects. |
| Engineering Lead | C | Commits fix windows; ensures hotfix plan; confirms technical risk posture. |
| Release Manager | I | Applies gates; coordinates approvals; captures release notes and artifacts. |

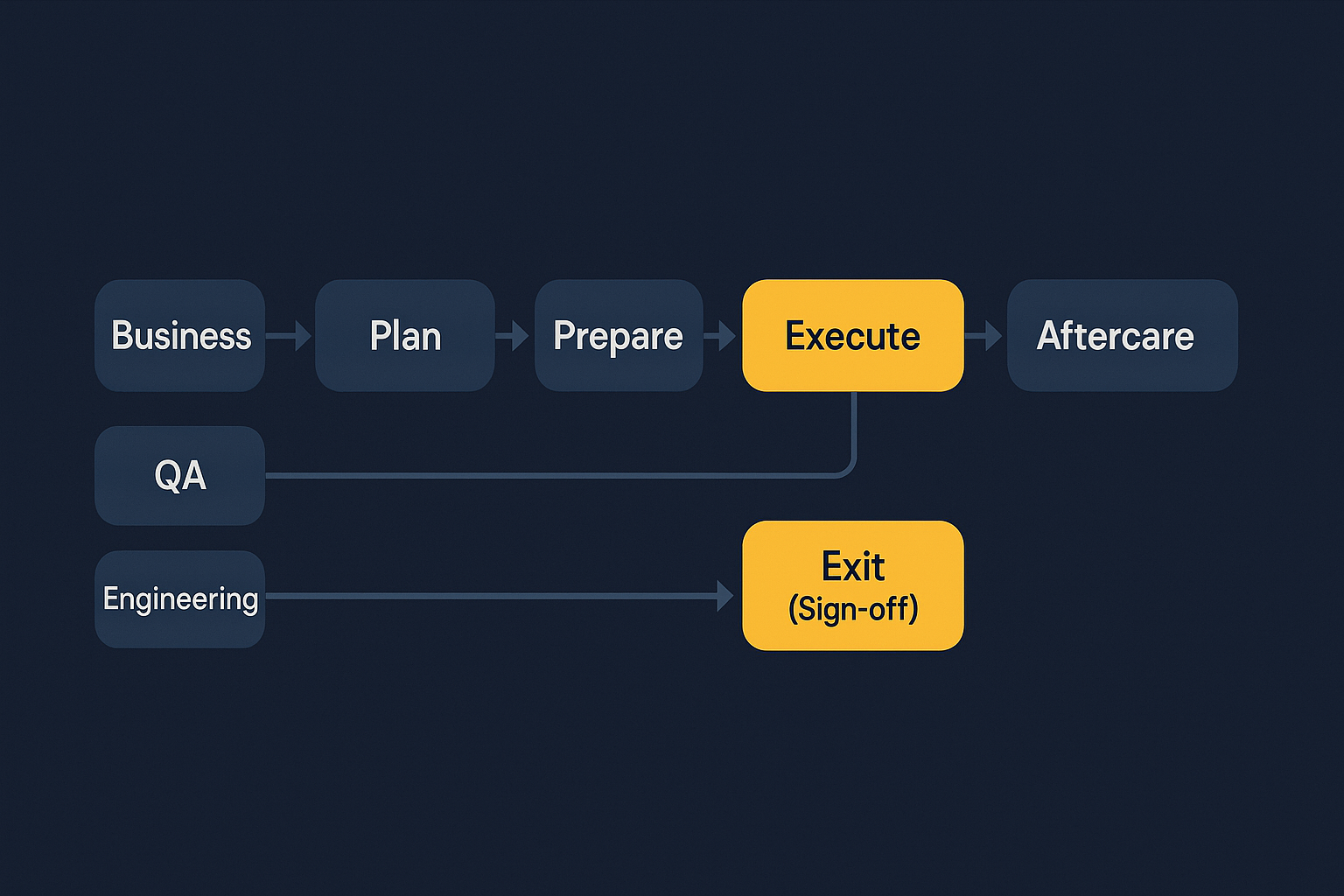
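A common RACI convention is exactly one Accountable role per activity, with at least one Responsible. The sketch below encodes the table above and checks those two rules; the `raci_problems` helper is a hypothetical illustration, not a standard tool.

```python
# Codes copied from the RACI table above.
UAT_RACI = {
    "Business Owner": "A",
    "UAT Lead/Coordinator": "R",
    "Business Testers/SMEs": "R",
    "Product Manager": "C",
    "QA Lead/Engineer": "C",
    "Engineering Lead": "C",
    "Release Manager": "I",
}

VALID_CODES = {"R", "A", "C", "I"}


def raci_problems(matrix: dict[str, str]) -> list[str]:
    """List rule violations; an empty list means the matrix is well-formed."""
    problems = []
    invalid = [role for role, code in matrix.items() if code not in VALID_CODES]
    if invalid:
        problems.append("unknown codes for: " + ", ".join(invalid))
    accountable = [role for role, code in matrix.items() if code == "A"]
    if len(accountable) != 1:
        problems.append(f"expected exactly one Accountable, found {len(accountable)}")
    if "R" not in matrix.values():
        problems.append("nobody is Responsible for doing the work")
    return problems


print(raci_problems(UAT_RACI))  # []
# Two owners is a classic way for sign-off to stall:
print(raci_problems({**UAT_RACI, "Product Manager": "A"}))
# ['expected exactly one Accountable, found 2']
```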

An End‑to‑End UAT Flow You Can Copy

If you need a concrete path to follow, start here. This is the UAT cycle from plan to aftercare, with steps you can scale up or down.

Your UAT Roadmap: From Planning to Production

Plan

Duration: 1–2 days. Confirm scope, stakeholders, environments, seeded data, and acceptance criteria. Publish the schedule and communicate expectations.

Prepare

Duration: 1–2 days. Create scenarios, test sets, and role-based accounts. Align logging/observability and feature flags.

Execute

Duration: 2–5 days. Business testers run scenarios and log issues with evidence; the team holds daily triage.

Fix & Retest

Duration: varies. Developers ship fixes; QA verifies; business testers retest and mark scenarios pass/fail.

Exit

Duration: 0.5 day. Check exit criteria, run the go/no-go meeting, capture sign-off, and publish notes.

Aftercare

Duration: 2–3 days. Provide early-life support and monitor error budgets and incident channels.
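To turn the roadmap into a rough calendar estimate, you can add up the phase ranges. This minimal sketch copies the day ranges above, takes the variable Fix & Retest window as a parameter, and assumes phases run back to back (`estimate_days` is a hypothetical helper).

```python
# Day ranges from the roadmap above; "Fix & Retest" varies, so it is passed in.
PHASE_DAYS = {
    "Plan": (1, 2),
    "Prepare": (1, 2),
    "Execute": (2, 5),
    "Exit": (0.5, 0.5),
    "Aftercare": (2, 3),
}


def estimate_days(fix_retest: tuple[float, float] = (0, 0),
                  include_aftercare: bool = True) -> tuple[float, float]:
    """Best- and worst-case totals, assuming phases run back to back with no overlap."""
    low, high = fix_retest
    for phase, (lo, hi) in PHASE_DAYS.items():
        if phase == "Aftercare" and not include_aftercare:
            continue
        low += lo
        high += hi
    return low, high


print(estimate_days(fix_retest=(1, 3), include_aftercare=False))  # (5.5, 12.5)
print(estimate_days())                                            # (6.5, 12.5)
```

Because Fix & Retest usually overlaps Execute in practice, treat the high number as a conservative ceiling rather than a forecast.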

Metrics That Keep UAT Honest

Numbers make progress visible and help everyone agree on next steps. These indicators reflect the quality of your UAT experience and the health of the UAT cycle. Track a small set of signals that drive decisions.

- Acceptance rate: % of scenarios passed

- Defect density: Issues per scenario or per hour of testing

- Critical blockers: Open P0/P1 count and age

- Retest cycle time: Time from fix available → retest → pass

- Bug leakage: Production incidents related to the UAT scope
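If your tracker can export scenario outcomes and defect timestamps, these signals are simple arithmetic. The sketch below assumes hypothetical input shapes (a scenario-to-outcome map, blocker open dates, and fix/pass timestamp pairs) and measures defect density per scenario; swap in hours of testing if that is what you track.

```python
from datetime import datetime
from statistics import mean


def uat_metrics(results: dict[str, str], defects_logged: int,
                blocker_opened: list[datetime],
                retests: list[tuple[datetime, datetime]],
                leaked_incidents: int, now: datetime) -> dict:
    """Summarize the five signals above from raw UAT records (assumes at least one scenario)."""
    executed = len(results)
    passed = sum(1 for outcome in results.values() if outcome == "pass")
    return {
        "acceptance_rate": passed / executed,
        "defect_density_per_scenario": defects_logged / executed,
        "open_blockers": len(blocker_opened),
        "oldest_blocker_days": max(((now - t).days for t in blocker_opened), default=0),
        # Retest cycle time: fix available -> scenario passes again, in hours.
        "mean_retest_hours": mean((passed_at - fixed_at).total_seconds() / 3600
                                  for fixed_at, passed_at in retests) if retests else None,
        "bug_leakage": leaked_incidents,
    }


m = uat_metrics(
    results={"S-01": "pass", "S-02": "pass", "S-03": "fail", "S-04": "pass"},
    defects_logged=6,
    blocker_opened=[datetime(2024, 5, 1), datetime(2024, 5, 3)],
    retests=[(datetime(2024, 5, 2, 9), datetime(2024, 5, 2, 15)),
             (datetime(2024, 5, 3, 10), datetime(2024, 5, 4, 10))],
    leaked_incidents=0,
    now=datetime(2024, 5, 6),
)
print(m["acceptance_rate"], m["oldest_blocker_days"], m["mean_retest_hours"])  # 0.75 5 15.0
```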

UAT Best Practices: Lessons from the Field

Based on hundreds of UAT cycles across industries, these best practices consistently lead to better outcomes:

1. Start UAT Planning Early

Don’t wait until development is complete. Begin UAT planning during the design phase:

- Define acceptance criteria alongside requirements

- Identify UAT participants early and secure their time

- Set up test environments in parallel with development

- Create test scenarios as features are designed

Why it matters: Early planning prevents last-minute scrambles and ensures stakeholders are prepared.

2. Use Real Data (But Sanitize It)

Production-like data reveals issues that synthetic test data misses:

- Copy production data to staging and anonymize PII

- Include edge cases: special characters, long names, unusual formats

- Test with actual customer scenarios, not just happy paths

- Maintain data refresh processes to keep test data current

Why it matters: Real data exposes integration issues, performance problems, and data quality concerns.
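One way to copy production data while protecting people is keyed pseudonymization: each identifier is replaced by a stable HMAC-based token, so the same customer maps to the same stand-in across tables and joins still work. This is a minimal sketch using Python's standard `hmac` and `hashlib` modules; the field names and key are assumptions.

```python
import hashlib
import hmac

# Hypothetical secret: load it from a vault and keep it out of source control.
PSEUDONYM_KEY = b"replace-with-a-secret-from-your-vault"


def pseudonym(value: str, kind: str) -> str:
    """Deterministic, keyed stand-in for a PII value (same input -> same output)."""
    digest = hmac.new(PSEUDONYM_KEY, f"{kind}:{value}".encode("utf-8"), hashlib.sha256)
    return f"{kind}_{digest.hexdigest()[:12]}"


def sanitize_customer(row: dict) -> dict:
    """Replace direct identifiers; keep business fields (country, plan, totals) realistic."""
    clean = dict(row)
    clean["name"] = pseudonym(row["name"], "name")
    clean["email"] = pseudonym(row["email"].lower(), "user") + "@example.com"
    clean["phone"] = "555-0100"  # placeholder from a range reserved for fictional use
    return clean


prod_row = {"name": "Zoë O'Brien", "email": "Zoe@Shop.example", "phone": "+44 20 7946 0000",
            "country": "DE", "plan": "annual", "order_total": 129.90}
print(sanitize_customer(prod_row))
```

Strictly speaking this is pseudonymization, not full anonymization: combinations of the remaining fields can still identify someone, so review quasi-identifiers too. Since the generated names are plain ASCII, seed the edge cases mentioned above (accents, apostrophes, very long names) as separate synthetic records.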

3. Keep Scenarios Business-Focused

Write test scenarios in business language, not technical jargon:

- ❌ Bad: “Verify POST request to /api/orders returns 201”

- ✅ Good: “Place an order with multiple items and verify confirmation email”

Why it matters: Business users can execute scenarios independently without technical translation.

4. Document Everything with Evidence

Every defect should include:

- Screenshots or screen recordings showing the issue

- Numbered steps to reproduce

- Expected vs. actual behavior

- Browser/device information

- Console logs and network activity (if available)

Pro tip: Tools like UI Zap automatically capture this context, saving hours of back-and-forth.
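If you don't use a capture tool, a lightweight check can still keep reports complete. This sketch assumes reports arrive as dictionaries with hypothetical field names; it flags missing required fields (console and network logs stay optional, as above) and renders every report in the same layout.

```python
REQUIRED_FIELDS = ("title", "steps", "expected", "actual", "environment", "evidence")


def missing_fields(report: dict) -> list[str]:
    """Required fields that are absent or empty."""
    return [name for name in REQUIRED_FIELDS if not report.get(name)]


def to_markdown(report: dict) -> str:
    """Render a complete report in one consistent layout for the tracker."""
    steps = "\n".join(f"{i}. {step}" for i, step in enumerate(report["steps"], start=1))
    evidence = "\n".join(f"- {item}" for item in report["evidence"])
    logs = report.get("logs") or "not captured"
    return (
        f"## {report['title']}\n\n"
        f"**Steps to reproduce**\n{steps}\n\n"
        f"**Expected:** {report['expected']}\n"
        f"**Actual:** {report['actual']}\n"
        f"**Environment:** {report['environment']}\n\n"
        f"**Evidence**\n{evidence}\n\n"
        f"**Console/network logs:** {logs}\n"
    )


report = {
    "title": "VAT line missing on EU checkout",
    "steps": ["Sign in as a DE customer", "Add two items to the cart", "Open checkout"],
    "expected": "A VAT line is shown in the order summary",
    "actual": "No VAT line is shown",
    "environment": "Chrome on macOS, staging",
    "evidence": ["checkout-de.png", "checkout-de.webm"],
}
assert missing_fields(report) == []
print(to_markdown(report))
```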

5. Hold Daily Triage During Active UAT

Don’t let defects pile up:

- 15-minute daily standup with business, QA, and engineering

- Triage new defects: severity, priority, owner

- Track fix progress and retest status

- Adjust timeline if critical issues emerge

Why it matters: Daily triage prevents surprises at the end and keeps everyone aligned.

6. Define Clear Exit Criteria Upfront

Before UAT starts, agree on what “done” looks like:

- All P0/P1 defects resolved or explicitly deferred

- Minimum scenario pass rate (e.g., 95%)

- Performance benchmarks met

- Security/compliance checks passed

- Formal sign-off from business owner

Why it matters: Clear criteria prevent endless UAT cycles and scope creep.

7. Plan for Aftercare

UAT doesn’t end at deployment:

- Monitor error rates and user feedback for 48–72 hours post-launch

- Have a rollback plan ready

- Keep the UAT team on standby to retest quick fixes

- Schedule a retrospective to capture lessons learned

Why it matters: Early-life support catches issues that slip through UAT.
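Aftercare monitoring works best with a pre-agreed trigger, so a rollback decision isn't argued in the moment. The sketch below is one hypothetical rule: recommend rollback if the hourly error rate stays above twice the pre-launch baseline for three hours in a row. The factor and window are assumptions to agree with engineering and the business owner; feed it the hourly rates from your 48–72 hour watch.

```python
def rollback_recommended(baseline_rate: float, hourly_rates: list[float],
                         factor: float = 2.0, consecutive_hours: int = 3) -> bool:
    """True if the error rate stays above factor x baseline for N hours in a row."""
    limit = baseline_rate * factor
    streak = 0
    for rate in hourly_rates:
        # A single noisy hour resets nothing permanently; only a sustained run counts.
        streak = streak + 1 if rate > limit else 0
        if streak >= consecutive_hours:
            return True
    return False


baseline = 0.01  # 1% of requests failing before launch
print(rollback_recommended(baseline, [0.012, 0.025, 0.03, 0.021, 0.011]))  # True
print(rollback_recommended(baseline, [0.03, 0.011, 0.03, 0.03]))           # False
```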

8. Make UAT Repeatable

Document your process so it improves over time:

- Create reusable test scenario templates

- Build a library of test data sets

- Standardize defect reporting formats

- Maintain a UAT playbook with roles and responsibilities

Why it matters: Each UAT cycle should be faster and smoother than the last.

What the Research Says

If you need to explain why UAT testing matters, these independent references are helpful.

- Late fixes cost more: IBM has written about how defects found after release are much more expensive to fix than those caught earlier. https://www.ibm.com/docs/en/ibm-mq/9.2?topic=development-cost-fixing-defects

- Active users improve outcomes: The Standish Group’s CHAOS research highlights user involvement as a top success factor in projects. https://www.standishgroup.com/sample_research_files/CHAOSReport2015-Final.pdf

- Testing early reduces rework: Nielsen Norman Group shows that small, early tests reveal issues before they become expensive. https://www.nngroup.com/articles/why-you-only-need-to-test-with-5-users/

- Big launches carry risk without controls: McKinsey documents how large IT projects often miss timelines and budgets without disciplined practices. https://www.mckinsey.com/capabilities/quantumblack/our-insights/delivering-large-scale-it-projects-on-time-on-budget-and-on-value

Figures vary by context and methodology, but the direction is consistent: earlier validation and engaged users reduce risk.

Templates and Spokes (This Hub Links Out)

When you are ready to put this into practice, these templates make it faster to get started. They help standardize the UAT experience and shorten each UAT cycle. We’ll publish them as separate articles and link them here:

- UAT Test Plan Template: scope, roles, schedule, communications, exit criteria

- UAT Scenario Writing Guide: patterns, coverage, and sample data

- UAT Environment Readiness Checklist: staging, flags, data, observability

- UAT Defect Triage Template: severity and impact, SLAs, ownership

- UAT Sign‑off Template: signatories, exceptions, go/no‑go notes

- UAT Exit Report Template: outcomes, metrics, learnings

Also useful while planning: Web Application Testing: 7‑Step Guide.

Smooth UAT: capture clear repro steps in seconds

During UAT, UI Zap auto‑collects screenshots, short videos, console logs, and network data, so business testers can file actionable issues without back and forth.

Key Terms to Know

- User Acceptance Testing (UAT): Final verification by business users that software meets business needs and is fit for release.

- Acceptance criteria: Specific conditions that must be met for a feature or scenario to be considered successful.

- Scenario: A realistic, business-language description of a task or workflow to test from start to finish.

- Test data: Prepared records and accounts used to execute scenarios; should be realistic and safe (sanitized).

- Business tester (SME): Subject-matter expert from the business who executes scenarios and validates outcomes.

- Exit criteria: Pre-agreed conditions that define when UAT is complete (e.g., pass rate, no open P0s).

- Sign-off: Formal approval from the accountable business owner to release, with any exceptions noted.

- Defect: An issue where actual behavior differs from expected behavior; may include severity and priority.

- Severity: Impact level of a defect on users or the business.

- Priority: Order in which a defect should be addressed relative to others.

- Triage: Daily process of reviewing, classifying, and assigning new defects and tracking fix/retest status.

- Retest: Verification step after a fix to confirm the defect no longer reproduces and the scenario passes.

FAQ

Have questions as you plan your first or next UAT cycle? These answers cover the ones teams ask most often, including a quick reminder of what UAT means and how it plays out in practice.

Is UAT the same as QA or system testing?

No. QA/system testing validates correctness against specifications and finds defects early. UAT validates business outcomes and readiness with business users in a production‑like environment.

Who should run UAT?

Business stakeholders and subject‑matter experts execute UAT. QA supports with test design and triage; engineering fixes issues; a business owner signs off.

How long should UAT take?

Most releases reserve 2 to 10 business days depending on scope, risk, and fix cycles. Typical durations: simple features 2–3 days; medium complexity 5–7 days; major releases 10+ days. Always include time for retesting after fixes and 1–2 days of buffer for the unexpected.

What if we find critical issues late?

Pause the release, triage severity and impact, fix or feature‑flag the change, retest, and re‑evaluate go/no‑go. Document exceptions in the sign‑off.

Do startups need formal UAT?

Yes. Lightweight UAT scales from startups to enterprises. Start with a short plan, five to ten scenarios, named owners, and a clear sign‑off.