If you’ve ever mixed up UAT and usability testing or faced delays because of it, you’re not alone. These terms often get used interchangeably, but they answer different questions. One validates that you built the right thing for the business. The other checks if people can actually use it with ease.

TL;DR — The Short Answer

According to usability expert Jakob Nielsen, early testing can reduce development costs by up to 50% by catching issues before they escalate.

User Acceptance Testing (UAT)

Business validation of end‑to‑end flows against acceptance criteria before launch.

- Run late (staging/pre‑prod)

- By business reps/domain experts

- Pass/fail against stories & ACs

Usability Testing

UX evaluation of ease, efficiency, and satisfaction across tasks and designs.

- Run early and often

- With target end‑users

- Behavioral insights & UX metrics

Better Together

Usability prevents late surprises; UAT grants go/no‑go confidence.

- Fewer reworks and delays

- Higher task success & acceptance

- Cleaner handoffs to release

Quick Summary — Side by Side

User Acceptance Testing

- Goal: Meets business needs

- Timing: Near release

- Participants: Business experts

- Metrics: Defects, acceptance rate

- Output: Pass/fail sign‑off

Usability Testing

- Goal: Easy and efficient for users

- Timing: Early and ongoing

- Participants: Target end‑users

- Metrics: Task success, error rate

- Output: Insights and recommendations

Pro tip: Don’t wait for UAT to discover usability flaws. Validate interface comprehension and task success on prototypes first; let UAT validate business rules and data integrity later.

Definitions (No Jargon)

Think of UAT as the final business check before launch, and usability testing as a reality check on whether real users can complete their tasks.

- UAT: A structured test led by the business team to confirm that new features meet the original requirements. It covers full workflows, data handling, and rules. The result is a simple pass or fail decision.

- Usability testing: A way to watch real users try out tasks and see what works well or frustrates them. It focuses on how easy and efficient the design is. You get practical insights and suggestions for improvements.

Key Differences You Can Act On

To make this actionable, think of UAT as checking if the house meets the blueprint, while usability testing ensures the family can live in it comfortably.

- Goal: UAT asks “Does it meet business needs?”; usability asks “Can people use it successfully?”

- Timing: UAT occurs near release; usability starts at wireframes and continues post‑launch.

- Participants: UAT uses business owners and domain experts; usability uses target end‑users.

- Artifacts: UAT uses acceptance test cases and data sets; usability uses task scripts, prototypes, and think‑aloud prompts.

- Deliverables: UAT yields pass/fail and defect tickets; usability yields a findings report with prioritized fixes.

- Metrics: UAT tracks defects and acceptance rate; usability tracks task success, time‑on‑task, error rate, and questionnaire scores such as SUS (System Usability Scale) or UMUX (Usability Metric for User Experience).

- Environment: UAT in staging with realistic data; usability anywhere from Figma to production.
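The usability metrics named above can be computed from simple session records. The following is a minimal sketch, not a prescribed method: the `TaskAttempt` record shape, the function names, and the sample data are assumptions made for illustration. The SUS scoring follows the standard rule: odd-numbered items contribute (answer − 1), even-numbered items contribute (5 − answer), and the sum is multiplied by 2.5 to give a score from 0 to 100.

```python
from dataclasses import dataclass
from statistics import median


@dataclass
class TaskAttempt:
    """One participant's attempt at one task (hypothetical record shape)."""
    participant: str
    completed: bool
    seconds: float
    errors: int


def task_success_rate(attempts):
    """Share of attempts that ended in task completion, from 0.0 to 1.0."""
    return sum(a.completed for a in attempts) / len(attempts)


def median_time_on_task(attempts):
    """Median duration in seconds, counting successful attempts only."""
    return median(a.seconds for a in attempts if a.completed)


def errors_per_attempt(attempts):
    """Average number of observed errors per attempt."""
    return sum(a.errors for a in attempts) / len(attempts)


def sus_score(responses):
    """Score one SUS questionnaire: ten answers on a 1-5 scale, in item order."""
    if len(responses) != 10 or any(r not in range(1, 6) for r in responses):
        raise ValueError("SUS needs ten responses, each from 1 to 5")
    total = 0
    for index, answer in enumerate(responses):
        # Items 1, 3, 5, ... are positively worded; items 2, 4, 6, ... negatively.
        total += (answer - 1) if index % 2 == 0 else (5 - answer)
    return total * 2.5


# Illustrative sample data, not real study results.
attempts = [
    TaskAttempt("p1", True, 42.0, 0),
    TaskAttempt("p2", True, 58.0, 1),
    TaskAttempt("p3", False, 120.0, 3),
    TaskAttempt("p4", True, 50.0, 0),
    TaskAttempt("p5", False, 95.0, 2),
]

print(task_success_rate(attempts))    # 0.6
print(median_time_on_task(attempts))  # 50.0
print(sus_score([4, 2, 4, 2, 4, 2, 4, 2, 4, 2]))  # 75.0
```

Counting time-on-task only for successful attempts is a design choice made here so that abandoned tasks do not skew the median; some teams report both figures.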

UAT: Who • When • Output

- Who: Business reps, domain experts

- When: Pre‑release on staging

- Output: Pass/fail sign‑off, defect tickets

- Artifacts: Acceptance test cases, test data

Usability Testing: Who • When • Output

- Who: Representative end‑users

- When: Early (wireframes) through post‑launch

- Output: Behavioral insights, prioritized UX fixes

- Artifacts: Task scripts, prototypes, think‑aloud notes

When to Run Each (Timeline)

Run usability tests early to shape solutions; run UAT late to validate readiness.

- Discovery: Usability on low‑fi flows validates comprehension and labels.

- Design: Usability on high‑fi prototypes tunes IA, copy, and affordances.

- Build: Spot‑check usability on dev builds for regressions.

- Pre‑launch: UAT on staging verifies stories, edge cases, and integrations.

- Post‑launch: Usability (again) to benchmark and iterate.

- Regression cycles: UAT scripts become living checks for critical flows.


Usability tests inform design early and often; UAT provides go/no‑go validation before release.

Inputs, Outputs, and “Done” Definition

UAT

- Acceptance test cases tied to stories

- Seed data and fixtures for edge cases

- Exit criteria (e.g., no open Sev‑1/Sev‑2)

- Sign‑off by product/business owner

Usability

- Recruiting plan and target personas

- Task scenarios and success criteria

- Moderation guide or unmoderated script

- Report with prioritized UX fixes

Shared

- Clear hypotheses and definitions of success

- Issue templates and triage rules

- Owner + timeline for follow‑ups

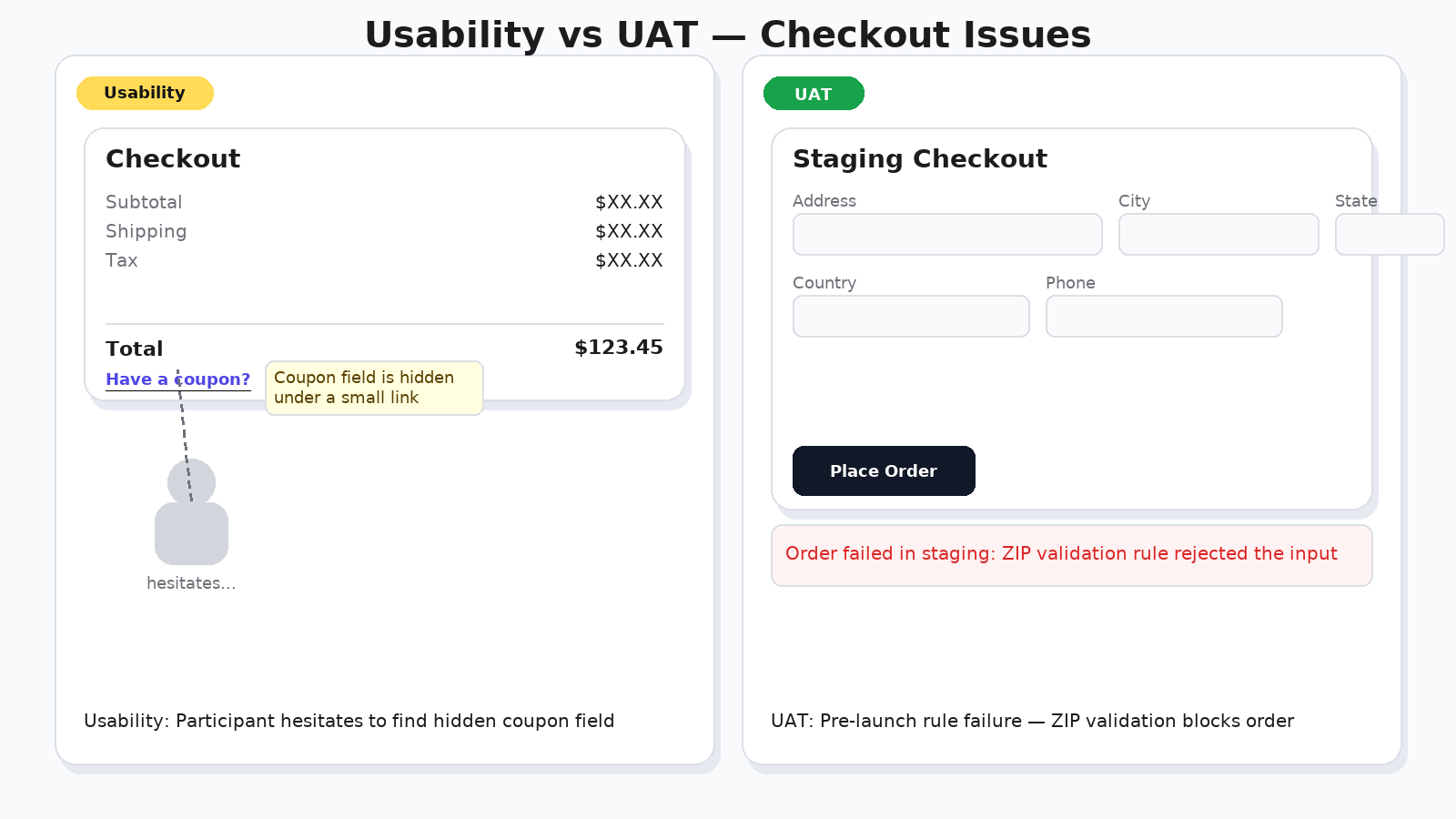

Example Scenario: Checkout Flow

Imagine your team has poured weeks into building a new checkout process for an online store. Here’s how issues might surface in each type of testing:

- Usability finding: 40% of users miss the “Apply coupon” control, which causes frustration and longer completion times. Remedy: Show the coupon field by default and label it clearly.

- UAT finding: The system rejects postal codes from outside the US, causing orders to fail even for valid addresses. Remedy: Update the validation rules and add international addresses to the test cases.
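The UAT finding above lends itself to data-driven acceptance cases. The sketch below is illustrative only: the `POSTAL_PATTERNS` table, the `is_valid_postal_code` function, and the sample cases are hypothetical, and the patterns are deliberately simplified (real postal formats carry more rules than these expressions capture).

```python
import re

# Simplified, illustrative patterns; real postal formats have more rules.
POSTAL_PATTERNS = {
    "US": re.compile(r"\d{5}(-\d{4})?"),
    "CA": re.compile(r"[A-Z]\d[A-Z] ?\d[A-Z]\d"),
    "DE": re.compile(r"\d{5}"),
}


def is_valid_postal_code(country, code):
    """Validate a postal code against the pattern for its country."""
    pattern = POSTAL_PATTERNS.get(country)
    if pattern is None:
        # Unknown country: accept any non-empty value rather than block the order.
        return bool(code.strip())
    return pattern.fullmatch(code.strip().upper()) is not None


# Each case: (country, postal code, expected validity).
ACCEPTANCE_CASES = [
    ("US", "94105", True),
    ("US", "94105-1234", True),
    ("CA", "K1A 0B1", True),
    ("DE", "10115", True),
    ("US", "K1A 0B1", False),
    ("CA", "12345", False),
]


def failing_cases(cases):
    """Return the (country, code) pairs whose result differs from the expectation."""
    return [
        (country, code)
        for country, code, expected in cases
        if is_valid_postal_code(country, code) != expected
    ]


print(failing_cases(ACCEPTANCE_CASES))  # []
```

Keeping the cases in a plain list means business reviewers can add a country or an edge case without touching the validation logic.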

Common Pitfalls (And How to Avoid Them)

We’ve all faced these challenges in product development—here’s how to sidestep them:

- Confusing goals: We’ve seen teams treat UAT like a UX review, only to need major redesigns at the last minute. Fix: Run usability tests before UAT to catch issues early.

- Wrong participants: Using only internal staff for usability can miss real-world problems. Fix: Recruit actual users who match your target audience.

- Binary thinking: It’s tempting to call UAT “passed” even with UX issues lingering. Fix: Include key UX criteria in your acceptance standards.

- No triage: Issues stack up without action, leading to frustration. Fix: Set up a weekly review meeting with templates to assign and track fixes.

- Poor evidence: Vague bug reports slow down resolutions. Fix: Always include videos, logs, and details to help devs fix faster.

- Accessibility as an afterthought: Overlooking this can alienate users. Fix: Build in accessibility checks for both usability and UAT.

- Over‑automation: Automation suits repeated UAT regression runs, but usability testing depends on observing real people. Fix: Balance tools with observation.

A Minimal, Effective Combo Plan

- Define outcomes: business acceptance criteria + UX success metrics.

- Prototype early and run usability tests with 5–8 participants per risky flow.

- Instrument dev builds for quick capture (screenshots, console, network).

- Write UAT scripts from user stories; seed realistic data.

- Hold a weekly triage: prioritize, assign, and track to done.

- Run UAT on staging; require no Sev‑1/2 open before release.

- Post‑launch, re‑measure usability and close the loop.

- Continuously fold critical UX findings into UAT scripts to prevent regressions.
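The release rule in the plan above (no open Sev‑1/2 defects) can be expressed as a small go/no-go check. This is a sketch under assumptions: the `Defect` record, the numeric severity scale where 1 is most severe, and the issue keys are hypothetical and would map onto whatever your tracker exports.

```python
from dataclasses import dataclass

# Assumption: severities are integers and 1 is the most severe.
BLOCKING_SEVERITIES = {1, 2}


@dataclass
class Defect:
    """A defect as exported from an issue tracker (hypothetical shape)."""
    key: str
    severity: int
    is_open: bool


def release_decision(defects):
    """Return ("go" or "no-go", keys of the open defects that block release)."""
    blockers = [
        d.key for d in defects
        if d.is_open and d.severity in BLOCKING_SEVERITIES
    ]
    return ("no-go" if blockers else "go", blockers)


# Illustrative data only.
defects = [
    Defect("SHOP-101", severity=1, is_open=False),  # fixed, no longer blocking
    Defect("SHOP-102", severity=2, is_open=True),   # still blocking
    Defect("SHOP-103", severity=3, is_open=True),   # open, but below the bar
]

print(release_decision(defects))  # ('no-go', ['SHOP-102'])
```

Returning the blocking keys alongside the decision gives the weekly triage a concrete list to work from.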

Related: Streamline your bug process with our Bug Triage Playbook and ready‑to‑use Triage Templates. And when you do find issues, here’s how to write a great bug report. For more on usability best practices, check out the Nielsen Norman Group guide.

Capture UAT and usability issues with full context

UI Zap records screenshots or short videos and automatically attaches console logs, network data, URL, browser, OS, and viewport — so developers can reproduce and fix faster.

UAT vs. Usability Testing — FAQ

Is UAT the same as beta testing?

No. UAT is a formal, pre-release verification by business stakeholders or client representatives against acceptance criteria. Beta testing is broader, often public, and focuses on gathering feedback under real-world usage. They can overlap but serve different goals.

Can UAT include usability checks?

It can surface usability issues, but it is not a substitute for usability testing. UAT participants are usually domain experts, not a representative sample of end-users, and the outcome is a pass/fail sign-off rather than design guidance.

When should I run usability tests?

Early and often: start at wireframes, continue at high-fidelity prototypes, spot-check during development, and benchmark after release. This prevents expensive late-stage changes.

Who participates in UAT vs usability tests?

UAT: business owners, product owners, support leads, client SMEs. Usability: representative end-users for each key persona and context of use.

Which metrics matter?

UAT: defect escape rate, acceptance rate, severity distribution, cycle time. Usability: task success, time-on-task, error rate, satisfaction (e.g., SUS), and qualitative friction themes.
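Two of the UAT metrics can be sketched in a few lines. The definitions here are assumptions, since teams define them differently: acceptance rate is taken as the share of executed acceptance test cases that passed, and severity distribution as a count of defects per severity level. The case IDs and values are made up for illustration.

```python
from collections import Counter


def acceptance_rate(results):
    """Share of executed acceptance cases that passed.

    `results` maps a test case ID to "pass" or "fail" (hypothetical format).
    """
    if not results:
        raise ValueError("no executed test cases")
    passed = sum(1 for outcome in results.values() if outcome == "pass")
    return passed / len(results)


def severity_distribution(severities):
    """Count defects per severity level."""
    return dict(Counter(severities))


# Illustrative data only.
results = {"AC-1": "pass", "AC-2": "pass", "AC-3": "fail", "AC-4": "pass"}

print(acceptance_rate(results))                 # 0.75
print(severity_distribution([3, 2, 3, 3, 1]))   # {3: 3, 2: 1, 1: 1}
```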

How do I keep findings actionable?

Use standard bug templates with steps, expected vs actual, environment, severity, and evidence (screens, logs, network). Route through weekly triage and link to owners and due dates.
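One way to enforce such a template is to model it as a record and flag empty fields before the report reaches triage. The `BugReport` class, its field names, and the sample report below are hypothetical; they mirror the fields listed above rather than any particular tracker's schema.

```python
from dataclasses import dataclass, field


@dataclass
class BugReport:
    """A bug report with the fields a triage meeting needs (hypothetical shape)."""
    title: str
    steps: list[str]
    expected: str
    actual: str
    environment: str
    severity: int
    evidence: list[str] = field(default_factory=list)

    def missing_fields(self):
        """Names of required fields that were left empty."""
        required = {
            "title": self.title,
            "steps": self.steps,
            "expected": self.expected,
            "actual": self.actual,
            "environment": self.environment,
            "evidence": self.evidence,
        }
        return [name for name, value in required.items() if not value]

    def to_text(self):
        """Render the report as plain text for pasting into a tracker."""
        steps = "\n".join(f"{n}. {step}" for n, step in enumerate(self.steps, 1))
        return (
            f"{self.title} (Sev-{self.severity})\n"
            f"Steps:\n{steps}\n"
            f"Expected: {self.expected}\n"
            f"Actual: {self.actual}\n"
            f"Environment: {self.environment}\n"
            f"Evidence: {', '.join(self.evidence) or 'none attached'}"
        )


# Illustrative report with the evidence left out on purpose.
report = BugReport(
    title="Checkout rejects Canadian postal code",
    steps=["Open checkout", "Enter postal code K1A 0B1", "Click Continue"],
    expected="Address is accepted",
    actual="Validation error is shown",
    environment="Staging, Chrome, macOS",
    severity=2,
)

print(report.missing_fields())  # ['evidence']
```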

Where does accessibility fit?

Treat accessibility as first-class: include a11y checks in usability reviews and as part of UAT exit criteria (e.g., WCAG AA for critical flows).

How do UAT and usability testing complement each other in agile development?

In agile, usability testing provides iterative feedback during sprints to refine designs, while UAT ensures the final product aligns with business goals before deployment.

What are the costs of skipping usability testing before UAT?

Skipping it can lead to costly rework; studies show early usability testing reduces development costs by up to 50% (source: Nielsen Norman Group).

Can automation replace usability testing?

No. Automation works well for UAT regression checks, but it cannot capture the human behavior and satisfaction insights that usability testing depends on.